For a year or so now, “innovation” has been bobbing around at the very top of the memepool. Everybody wants to bottle the stuff and mix it into their corporate water supplies.

I’ve been on the bandwagon too, I confess. It fascinates me — where do ideas come from and how do they end up seeing the light of day? How does an idea become relevant and actionable?

There’s a recent commercial for Fedex where a group of pensive executives sit around a conference table, with their salt-and-pepper-haired, square-jawed CEO (I assume) at the head and a weak-chinned, rumpled, dorky underling next to him. The CEO asks how they can cut costs (I’m paraphrasing), and the younger, dorky guy recommends one of Fedex’s new services. He’s ignored. But then the CEO says exactly the same thing, and everybody nods in agreement and congratulates him on his genius.

The whole setup is a big cliche. We’ve seen it time and again in sitcoms and elsewhere. But what makes this rendition different is how it points out the difference in delivery and context.

In looking for a transcript of this thing, I found another blog that summarizes it nicely, so I’ll point to it and quote it here.

The group loudly concurs as the camera moves to the face of the worker who proposed the idea in the first place. Perplexed, he declares, “You just said what I just said only you did this,” as he mimics his boss’s hand motions.

The boss looks not at him, but straight ahead, and says, “No, I did this,” as he repeats his hand motion. The group of sycophants proclaims, “Bingo, Got it, Great.” The camera captures the contributor, who has a sour grimace on his face.

(Thanks Joanne Cini for the handy recap.)

What it also captures is the reaction of an older colleague sitting next to the grimacing dorky guy. He gives him a little nod that shows a mixture of pity, complicity in what just happened, and a sort of weariness that seems to say, “Yeah, see? That’s how it works, young fella.”

It’s a particularly insightful bit of comedy. It lampoons the fact that so much of how ideas happen in a group environment depends on context, delivery, and perception (and here I’m going to pick on business, but it happens everywhere in slightly different flavors). Dork-guy not only doesn’t get the language that’s being used (physical and tonal), but doesn’t “see” it well enough to even be able to imitate it correctly. He doesn’t have the literacy in that language that the others in the room do, and feels suddenly as if he’s surrounded by aliens. Of course, they all perceive him as alien (or just clueless) as well.

I know I’m reading a lot into this slight character, but I can’t help it. By the way, I’m not trying to insult him by calling him dork-guy; it’s just the way he’s set up in the commercial. I think the dork in all of us identifies with him. I definitely do.

In fact, I know from personal experience that, in dork-guy’s internal value matrix, none of the posturing means a hill of beans. He and his friends probably make fun of people who put so much weight on external signals; they think of it as a shallow veneer. Like most nerdy people, he assumes that your gestures, haircut, or tone of voice don’t affect whether you win the chess match. But in the corporate game of social capital, “presence” is an essential part of winning.

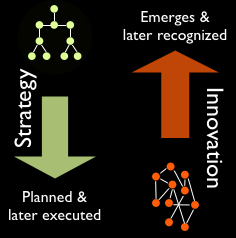

Ok, so back to innovation. There’s a tension among those who talk and think about innovation between Collective Intelligence (CI) and Individual Genius (IG). To some degree there are those who favor one over the other, but I think most people who think seriously about innovation and try to do anything about it struggle with the tension within themselves. How do we create the right conditions for CI and IG to work in synergy?

The Collective Intelligence side has lots of things in its favor, especially lately. With so many collective, emergent activities happening on the Web, people now have the tools to tap into CI like never before — when else in history did we have the ability for people all over the world to collaborate almost instantaneously in rapid conversation, discussion and idea-vetting? Open Source philosophy and the “Wisdom of Crowds” have really found their moment in our culture.

I’m a big believer too, frankly. I’m not an especially rabid social constructivist, but I’m certainly a convert. Innovation (outside of the occasional bit that’s just for an individual privately) derives its value from communal context. And most innovations that we encounter daily were, in one way or another, vetted, refined and amplified by collaboration.

Still, I also realize that the Eureka Moments don’t happen in multiple minds all at once. There’s usually someone who blurts out the Eureka thought that catalyzes a whole new conversation from that “so perfect it should’ve been obvious” insight. Sometimes, of course, an individual can’t find anyone who hears and understands the Eureka thought, and their Individual Genius goes on its lonely course until either they do find the right context that “gets” their idea or it just never goes anywhere.

This tension between IG and CI is rich for discussion and theorizing, but I’m not going to do much of that here. It’s all just a very long setup for me to write down something that was on my mind today.

In order for individuals to care enough to have their Eureka thoughts, they have to be in a fertile, receptive environment that encourages that mindset. People new to a company often have a lot of that passion, but it can be drained away long before their 401k matching is vested. But are these people just after personal glory? Well, yeah, that’s part of it. But they also want to be the person who thought of the thing that changed everybody’s lives for the better. They want to be able to walk around and see the results of that idea. Both incentives matter, and both are essential to the care and feeding of the delicate balance that brings forth innovation.

Take the Fedex commercial from above. The guy had the idea, and he’ll see it executed. Won’t he be gratified to see the savings in the company’s bottom line and to see people happier? Sure, but that’s only part of his incentive. The other part is for his boss, at the quarterly budget meeting, to look over and say “X over there had a great idea to use this service, and look what it saved us; everybody give a round of applause to X!” A bonus or promotion wouldn’t hurt either, but public acknowledgement of an idea’s origins goes a very, very long way.

I’ve worked in a number of different business and academic environments, and they vary widely in how they handle this bit of etiquette. And it is a kind of etiquette. It’s not much different from what I did above, where I thanked the source of the text I quoted. Maybe it’s my academic experience that drilled this into me, but it’s just the right thing to do to acknowledge your sources.

In some of my employment situations, I’ve been in meetings where an idea I’ve been evangelizing for months finally emerges from the lips of one of my superiors, and it’s stated as if it just came to them out of the blue. Maybe I’m naive, but I usually assume the person just didn’t remember they’d heard it first from me. But even if that’s the case, it’s a failure of leadership. (I’ve heard it done not just to my ideas but to others’ too. I also fully acknowledge I could be just as guilty of this as anyone, because I’m relatively absent-minded, but I consciously work to be sure I point out how anything I do was supported or enhanced by others.) It’s a well-known strategy to subliminally get a boss to think something is his or her own idea in order to make sure it happens, but if that strategy is the rule rather than the exception, it’s a strong indicator of an unhealthy place for ideas and innovation (not to mention people).

But the Fedex commercial does bring a harsh lesson to bear, a lesson I’m still struggling to learn. No matter how good an idea is, it’s only as effective as the manner in which it’s communicated. Sometimes you have no control over this; it’s just built into the wiring. In the (admittedly exaggerated, but not very much) situation in the Fedex commercial, it’s obvious that most of dork-guy’s problem is that he works in a codependent culture full of sycophants who mollycoddle a narcissistic boss.

But perhaps as much as half of dork-guy’s problem is that he’s dork-guy. It’s possible that there are some idyllic work environments where everyone respects and celebrates the contributions of everyone else, no matter what their personal quirks. But chances are it’s either a kindergarten classroom or a non-profit organization. And I happen to be a big fan of both! I’m just saying, I’m learning that if you want to play in certain environments, you have to play by their rules, both written and unwritten. And I think we all know that the ratio of unwritten-to-written is something like ten-to-one.

In dork-guy’s company, sitting up straight, having a good haircut and a pressed shirt mean a lot. But what means even more is saying what you have to say with confidence, and an air of calm inevitability. Granted, his boss would probably still steal the idea, but his colleagues would start thinking of him as a leader and, over time, maybe he’d manage to claw his way higher up the ladder. I’m not celebrating this worldview, by the way. But I’m not condemning it either. It just is. (There is much written hither and yon about how gender and ethnicity complicate things even further; speaking with confidence as a woman can come off negatively in some environments, and for some cultural and ethnic backgrounds, it would be very rude. Whole books cover this better than I can here, but it’s worth mentioning.)

Well, it may be a common reality, but it certainly isn’t the best way to get innovation out of a community of coworkers. In environments like that, great ideas flower in spite of where they are, not because of it. The sad thing is, too many workplaces assume that “oh we had four great ideas happen last year, so we must have an excellent environment for innovation,” not realizing that they’re killing off hundreds of possibly better seedlings in the process.

I’ve managed smaller teams on occasion, sometimes officially and sometimes not, but I haven’t been responsible for whole departments or large teams. Managing people isn’t easy. It’s damn hard. It’s easy for me to sit at my laptop and second-guess other people with responsibilities I’ve never shared. That said, sometimes I’m amazed at how ignorant and self-destructive some management teams can be as a group. They can talk about innovation or quality or whatever the buzzword du jour is, and they can institute all sorts of new activities, pronouncements and processes to further said buzzword, but do nothing about the major rifts in their own ranks that painfully hinder their workers from collaborating or sharing knowledge; they reinforce (either on purpose or unwittingly) cultural norms that alienate the eccentric-but-talented and give comfort to the bland-but-mediocre. They crow about thinking outside the box, while perpetuating a hierarchical corporate system that’s one of the most primitive boxes around.

Ok, that last bit was a rant. Mea culpa.

My personal take-away from all this hand-wringing? I can’t blame the ‘system’ or ‘the man’ for anything until I’ve done an honest job of playing by the un/written rules of my environment. It’s either that, or play a new game. To me, it’s an interesting challenge if I look at it that way; otherwise it’s just disheartening. I figure either I’ll succeed or I’ll get so tired of beating myself against the cubicle partitions, I’ll give up and find a new game to play.

Still, eventually? It’d be great to change the environment itself. Maybe I should go stand in front of my bathroom mirror and practice saying that with authority? First, I have to starch my shirts.