When I first heard about the Kozinski story (warning: the story contains some mature content), it was on NPR’s All Things Considered. The interviewer spoke with the LA Times reporter, who went on about how the judge had “published” offensive material on a “public website.”

I won’t go into detail on the story itself. But I urge everyone to take the LA Times article with a grain or two of salt. Evidently, the whole thing started when someone with an ax to grind against the judge sent links and information to the media, and the media went on to make it all look as horrible as possible. However, the more we learn about the details of the case, the more it sounds like the LA Times is twisting the truth a great deal. **

To me, though, the content issue isn’t as interesting (or challenging) as the “public website” idea.

Basically, this was a web server with an IP address and URL on the Internet, intended for the family to share files on, among other things (possibly as an email server too? I don’t know). It’s the sort of thing that many thousands of people run; I lease one of my own that hosts this blog. The difference is that Kozinski (or, evidently, his grown son) set it up to be private, for just the family’s use. Or at least he thought he had. He didn’t count on a disgruntled individual looking beyond the “index” page (which clearly signaled that it was a private site) and discovering other directories where images and what-not were listed.

Lawrence Lessig has a great post here: The Kozinski mess (Lessig Blog). He makes the case that this wasn’t a “public” site at all, since it was never intended to be public. You could only see this content if you typed various additional directory names onto the end of the base URL. Lessig likens it to having a faulty lock on your front door, and someone snooping through your private stuff and then telling everyone about it. (Saying it was an improperly installed lock would be more accurate, IMHO.)

The comments on the page go on and on, with much debate about the content and the context, about what “private” and “public” mean in this situation.

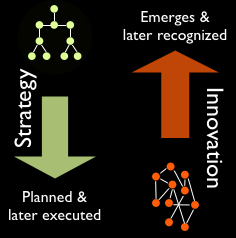

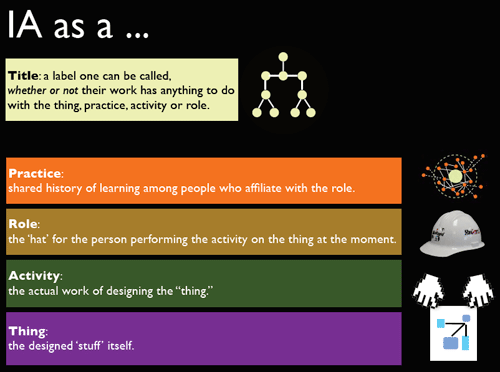

One point I don’t see being made (possibly because I didn’t read it all) is that there’s now a difference between “public” and “published.”

It used to be that anything extremely public (that is, able to be seen by more than just a handful of people) could only get that way if it was deliberately published that way. It was impossible for anyone beyond the people in physical proximity to hear you, see you or look at your stuff unless you put a lot of time and money into making it that way: publishing a book, setting up a radio or TV station and broadcasting, or (on the low end) using something like a CB radio to purposely send out a public signal (and even then, laws limited the power and reach of such a device).

But the Internet has obliterated that assumption. Now, things we intend for a private context can unwittingly end up far more public than we meant them to be. By now almost everyone online has sent an email to more people than they meant to, or accidentally sent a private note to everyone on Twitter. Or perhaps you’ve published a blog post you thought only a few regular readers would see, only to find out that others read it and were offended because they didn’t get the context.

We need to distinguish between “public” and “published.” We may even need to distinguish between various shades of “published” — the same way we legally distinguish between shades of personal injury — by determining intent.

There’s an informative thread over at Groklaw as well.

**About the supposedly pornographic content, I’ll only say that it sounds like there was no “pornography” as typically understood on the judge’s server, only content that had accumulated from the many “bad-taste jokes” that get passed around the net all the time. That is, nothing more offensive than you’d see on an episode of Jackass or South Park. Whether that sort of thing is your cup of tea, and whether you think it is harmfully degrading to any segment of society, is certainly your call. Some of the items described are things that I roll my eyes at as silly, vulgar humor, and then forget about. But describing a video (which is currently on YouTube) in which an amorously confused donkey tries to mount a guy who was (inadvisedly) trying to relieve himself in a field as “bestiality” is pretty absurd. Monty Python it ain’t; but Caligula it ain’t either.