So, the short version of my point in this post (the “tl;dr,” as it were) is this: possibly the most significant value of Second Life is as a pioneering platform for navigating & comprehending the pervasive information dimension in a ubiquitously, pervasively networked physical environment.

That’s already a mouthful … But here’s the longer version, if you’re so inclined …

It’s easy to dismiss Second Life as kitsch now. Even though it’s still up and running, and evidently still providing a fulfilling experience for its dedicated user base, it no longer has the sparkle of the Next Big Thing that the hype of several years ago brought to it.

I’ll admit, I was quite taken by it when I first heard of it, and I included significant commentary about it in presentations and writings I did at the time. But after only a few months, I started realizing it had serious limitations as a mainstream medium. For one thing, the learning curve for satisfying creation was too steep.

Three-dimensional modeling is hard enough with even the best tools, but Second Life’s composition toolset at the height of its popularity was frustratingly clumsy. Even if it had been state of the art, however, drawing in three dimensions takes special knowledge & ability. Unlike text-based MUDs, where anyone with a half-decent grasp of language could create relatively convincing characters, objects and rooms, Second Life required everything to be made explicitly, literally. Prose allows room for gestalt: the reader can fill in the details with imagination. Not in an environment like Second Life, though.

Plus, to make anything interactive, you had to learn a fairly complex scripting language. Not a big deal for practiced coders, but for regular people it was daunting.

So, as Second Life attracted more users, it became more of a hideous tragedy-of-the-commons experience, with acres of random, gaudy crap lying about, and one strange shopping mall after another with people trying to make money on the platform selling clothing, dance moves, cars and houses — things that imaginative players would likely have preferred to make for themselves, but instead had to piece together through an expensive exercise in collage.

At the heart of what made so many end up dismissing the platform, though, was its claim to being the next Web … the new way everyone was supposed to interact digitally online.

I never understood why anyone was making that claim, because it always seemed untenable to me. Second Life was inspired by Neal Stephenson’s virtual reality landscape in *Snow Crash* (and, somewhat more distantly, Gibson’s vision of “cyberspace”), and managed an adroit facsimile of how Stephenson’s fictional world sounded. But Stephenson’s vision was essentially metaphorical.

Still, beyond the metaphor issue, the essential qualities of the Web that made it so ubiquitous were absent from Second Life. The Web is decentralized: not just user-created but non-privatized and widely distributed. It exists on millions of servers run by millions of people, companies, universities and the like. The Web is also made of a technology that’s much simpler for creators to use and, perhaps most importantly, the Web is very open and easily integrated into everything else. Second Life never got very far with being integrated in that way, though it tried. The main problem was that the experience itself was not easily transferable to other media, devices, etc. Even though Linden Lab tried using a URL-like linking method that could be shared anywhere as text, the *content* of Second Life was essentially a “virtual reality” 3D visual experience, something that just doesn’t transfer well to other platforms, as opposed to the text, static images & videos we share so easily across the Web & so many applications & devices.

Well, now that I’ve said all that somewhat negative stuff about the platform, what do I mean by “what we learned”?

It seems to me Second Life is an example of how we sometimes rehearse the

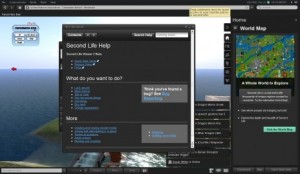

Recent version of the SL "Viewer" UI (danielvoyager.wordpress.com)

future before it happens. In SL, you inhabit a world that’s essentially made of information. Even the physical objects are, in essence, information — code that only pretends to be corporeal, but that can transform itself, disappear, reappear, whatever — a reality that can be changed as quickly as editing a sentence in a word processor.

While it’s true that our physical world can’t literally be changed that way, the truth is that the information layer that pervades it is becoming more substantial, more meaningful, and more influential in our experience of the world around us.

If “reality” is taken to be the sum total of all the informational and sensory experience we have of our environs, and we acknowledge that the informational (and to some degree sensory, as far as sight and sound go) layer is becoming dominated by digitally mediated, networked experience, then we are living in a place that is not too far off from what Second Life presents us.

Back when I was on some panels about Second Life, I would explain that the most significant aspect of the platform for user experience wasn’t the 3D space we were interacting with, but the “Viewer”: the mediating interface we used for navigating and manipulating that space. Linden Lab continually revised and matured the extensive menu-driven interface and search features to help inhabitants navigate that world, find other players & interest groups, or create layers of permissions rules for all the various properties and objects. It was flawed, frustrating and volatile, but it was tackling some really fascinating, complex problems around how to live in a fluid, information-saturated world where wayfinding had more to do with the information layer *about* the actual places than the “physical” places themselves.

If we admit that the meaning & significance of our physical world is becoming largely driven by networked, digital information, we can’t ignore the fact that Second Life was pioneering the tools we increasingly need for navigating, searching, filtering & finding our way through our “real life” environments.

What a city “means” to us is tied up as much in the information dimension that pervades it (the labels & opinions, statistics & rankings, the stuff that represents it on the grid) as in the physical atoms we touch as we walk its sidewalks, drive through its streets, or sit in its restaurants and theaters. All those experiences are shaped powerfully by reviews and tips on Yelp, or the record in Foursquare of a friend having been in a particular spot, or a picture we see on Flickr taken at a particular latitude and longitude. Or the real-time information about where our friends are *right now* and which places are kinda dead tonight. Not to mention the market-generated information about price, quantity & availability.

It’s always been the case that the narrative of a place has as much to do with how we experience the reality of the place as the physical sensations we have of it in person. But now that narrative has been made explicit, as a matter of record, and cumulative as well — from the interactions of everyone who has gone before us there and left some shadow of their presence, thoughts, reactions.

One day it would be interesting to compare all the ways in which various bits of software are helping us navigate this information dimension to the tools invented for inhabiting and comprehending the pure-information simulacrum of Second Life. I bet we’d find a lot of similarities.